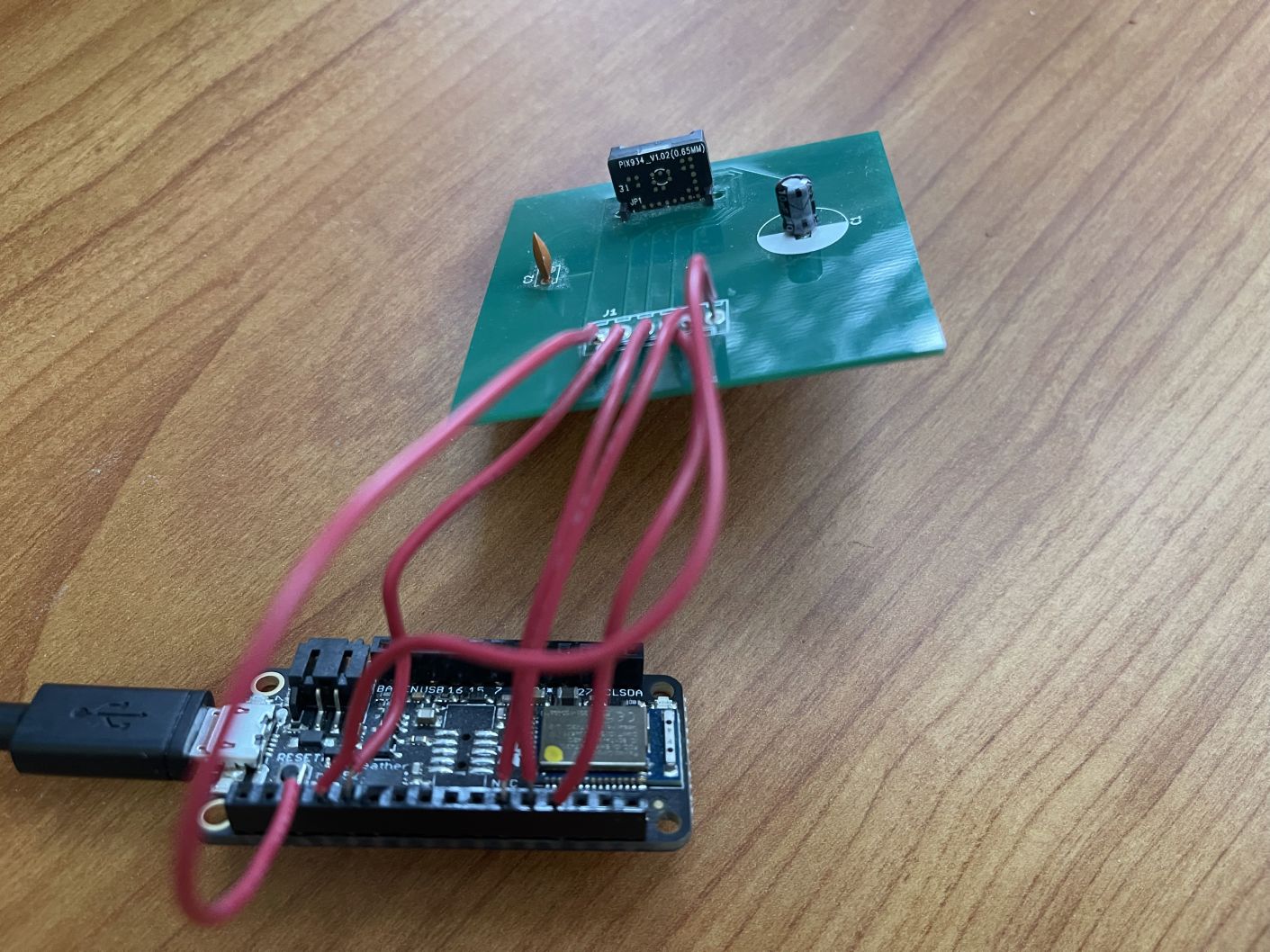

RoBart is a multimodal LLM-powered (Claude or GPT-4) robot that uses an iPhone 12 Pro Max for its sensors, user interface, and compute! ARKit handles SLAM and uses the LiDAR sensor to map the environment. A salvaged hoverboard provides the motors, battery, and base chassis. An Adafruit Feather board communicates with the iPhone via Bluetooth and controls the motors.


The cool thing about RoBart is that there is no task-specific code. It listens for human speech and then the LLM agent figures out how to perform the task using the very limited selection of control commands exposed to it.
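To make the idea concrete, here is a minimal sketch of an agent loop driven by a small, whitelisted command set. It is not RoBart's actual code: the command names (`move`, `turn`, `speak`, `done`), the JSON reply format, and the `llm` and `execute` callables are all assumptions made up for illustration. The real robot would call Claude or GPT-4 and forward actions to the motor controller.

```python
import json

# Hypothetical command set: the real commands exposed to the LLM are not
# listed in the text, so these names and arguments are illustrative only.
ALLOWED_COMMANDS = {
    "move": {"distance_m"},
    "turn": {"degrees"},
    "speak": {"text"},
    "done": set(),
}


def parse_action(reply):
    """Parse one JSON action from the model and check it against the whitelist."""
    action = json.loads(reply)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(action, dict):
        raise ValueError("action must be a JSON object")
    name = action.get("command")
    if name not in ALLOWED_COMMANDS:
        raise ValueError(f"unknown command: {name!r}")
    args = action.get("args", {})
    if not isinstance(args, dict) or set(args) != ALLOWED_COMMANDS[name]:
        raise ValueError(f"bad arguments for {name!r}")
    return {"command": name, "args": args}


def run_agent(task, llm, execute, max_steps=10):
    """Ask the model for one action at a time until it says it is done.

    `llm` maps a prompt string to a reply string; `execute` runs a validated
    action and returns an observation. Both are assumed interfaces.
    """
    history = [f"TASK: {task}"]
    executed = []
    for _ in range(max_steps):
        reply = llm("\n".join(history))
        try:
            action = parse_action(reply)
        except ValueError as err:
            # Feed the error back so the model can correct itself.
            history.append(f"ERROR: {err}")
            continue
        if action["command"] == "done":
            break
        observation = execute(action)
        executed.append(action)
        history.append(f"ACTION: {reply}")
        history.append(f"OBSERVATION: {observation}")
    return executed


def scripted_llm(replies):
    """Stand-in for a real model call: replays canned replies in order."""
    it = iter(replies)
    return lambda prompt: next(it)
```

Nothing in the loop knows about any particular task. The spoken request goes in as text, and the only way the model can affect the world is through the whitelisted commands, so an invalid request such as a made-up `fly` command is rejected and reported back rather than executed.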

In addition to RoBart, I've also been learning how to use imitation learning (e.g., ACT) to control robot arms. Stay tuned for more. If you're building in the space and looking for a collaborator, please reach out!